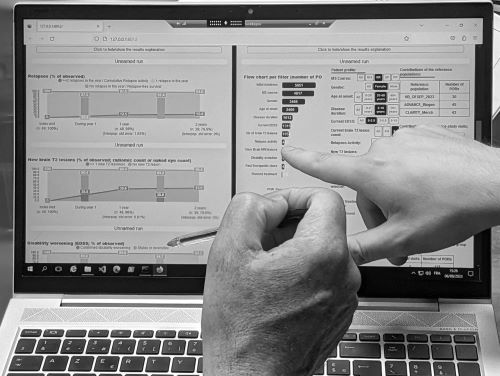

© G. Anichini. Clinical decision support system for multiple sclerosis (Demuth et al., 2023)

Medical decision-support systems are increasingly being used in clinical specialties, with a particular focus on automating the various stages of diagnostic and prognostic work. While these technologies are not new, and have been widely used in the healthcare domain since the 1990s, they are now taking on specific forms linked to developments in artificial intelligence (AI) and to methods for exploiting massive data. New devices, essentially based on machine learning algorithms, aim to perform a series of heterogeneous operations: classifying medical images, targeting and characterising lesions, predicting patient survival or relapse. These operations are crucial, as they are involved in therapeutic choices and in the care of individuals. Now-classic sociological works, which mainly focus on the older generation of decision-support systems - such as expert systems - analyse these objects through issues as diverse as: the standardisation of medical work (Berg, 1997); the appropriation of technology by users and the way in which the hardware environment does or does not authorise certain cognitive tasks (Hutchins, 1995; Suchman, 1987); the construction of the user and the power relations it implies (Forsythe, 2001; Woolgar, 1990). The aim of this two-day workshop is to consider how these analyses can be renewed by studying decision-support systems that are currently integrated into clinical practice or under development. To this end, we call on research in the humanities and social sciences that addresses these issues and fits into one (or more) of the following lines of research:

1) Design work and the values conveyed by decision-support systems. If each tool embodies "scripts" (Akrich, 1987), what ideals support these scripts? This section will look at how the clinical decision-making process is imagined through technology, and how patients (and their diseases) are described, represented and measured. We will look at the extent to which technical choices are linked to legal and regulatory considerations, whether real or perceived. More generally, and based on what computational tools make visible or invisible (Jasanoff, 2017), we wish to understand how these tools act as witnesses to specific socio-technical ideals.

2) Analyses of clinical uses: appropriation and resistance. Algorithmic systems rely on quantification that can be challenged, contested, hijacked or adopted by clinicians and healthcare professionals. The new uncertainties emerging from their use call into question the relevance of automated operations when faced with elements that are not quantified by the machine but are taken into account by clinicians in their daily work (Anichini and Geffroy, 2021), as well as the use of results obtained from these technologies in the relationship with the patient (Geampana and Perrotta, 2023). Based on reflections on various medical contexts, we would like to highlight the different ways in which these tools articulate with, or stand in opposition to, local norms. How might the representation and implementation of ethical standards such as independence and responsibility be modified here? Under which conditions do healthcare professionals consider decision-support systems to be "objective"? What kind of work does this entail? How does the introduction of these tools redefine medical expertise, and what kinds of collectives accompany it?

3) What can we do with lived experiences? When a decision-support system is introduced into a medical consultation, how can the doctor-patient dialogue evolve? What different strategies or rhetoric can the clinician employ? What is the tool used for? How does the patient receive or perceive this intermediary? How does this tool concretise or subvert the principle of co-decision? This section draws on empirical research looking at lived experiences of the use of medical decision-support systems in doctor-patient relationships. Do these tools modify or transform these relationships and, if so, in what way? What are the consequences, for example, for the moment of diagnosis, which can now be based on the algorithmic projection of a disease trajectory?

The workshop will take place in person only, on 16 and 17 May at the MSH Ange-Guépin (Nantes). It is organised as part of the research project entitled "Study of the MS Vista algorithmic medical decision support system" (coord. M. Lancelot), funded by the Fondation pour l'aide à la recherche sur la sclérose en plaques (ARSEP). This research is a continuation of the DataSanté programme (coord. S. Tirard), which will be closing at the same time.

Scientific and organisational committee: G. Anichini, M. Lancelot, S. Tirard, S. Desmoulin, X. Guchet

Bibliography

Akrich M., 1987, « Comment décrire les objets techniques? », Techniques et culture, 9, p. 49‑64.

Anichini G., Geffroy B., 2021, « L’intelligence artificielle à l’épreuve des savoirs tacites. Analyse des pratiques d’utilisation d’un outil d’aide à la détection en radiologie », Sciences sociales et santé, 39, 2.

Berg M., 1997, Rationalizing Medical Work: Decision-Support Techniques and Medical Practices, MIT Press.

Forsythe D., 2001, Studying Those Who Study Us: An Anthropologist in the World of Artificial Intelligence, Stanford University Press.

Geampana A., Perrotta M., 2023, « Predicting success in the embryology lab: The use of algorithmic technologies in knowledge production », Science, Technology, & Human Values, 48, 1, p. 212‑233.

Hutchins E., 1995, Cognition in the Wild, MIT Press.

Jasanoff S., 2017, « Virtual, visible, and actionable: Data assemblages and the sightlines of justice », Big Data & Society, 4, 2, p. 2053951717724477.

Suchman L.A., 1987, Plans and Situated Actions: The Problem of Human-Machine Communication, Cambridge University Press, 224 p.

Woolgar S., 1990, « Configuring the user: the case of usability trials », The Sociological Review, 38, 1_suppl, p. 58‑99.
